Top AI Crawlers to Watch in 2025: How to Optimize for Them Using LLMs.txt

- Posted 6 days ago

AI crawlers are becoming part of how web content is collected, analyzed, and served to users, from search engines to content recommendations and data analysis. In 2025, it pays to know which AI crawlers visit your site and to decide deliberately how they may interact with your content. This post covers the most prominent AI crawlers to watch in 2025, how they work, and how you can manage them using LLMs.txt and HTTP headers.

The Rise of AI Crawlers

AI crawlers, also known as bots or web spiders, go beyond traditional search engine crawling. They fetch pages much as search crawlers do, but the content they collect feeds large language models that interpret context, sentiment, and relevance, which can lead to more personalized answers and recommendations. As a result, webmasters and SEO professionals must adapt their strategies to account for these crawlers.

Common AI Crawlers You Should Know

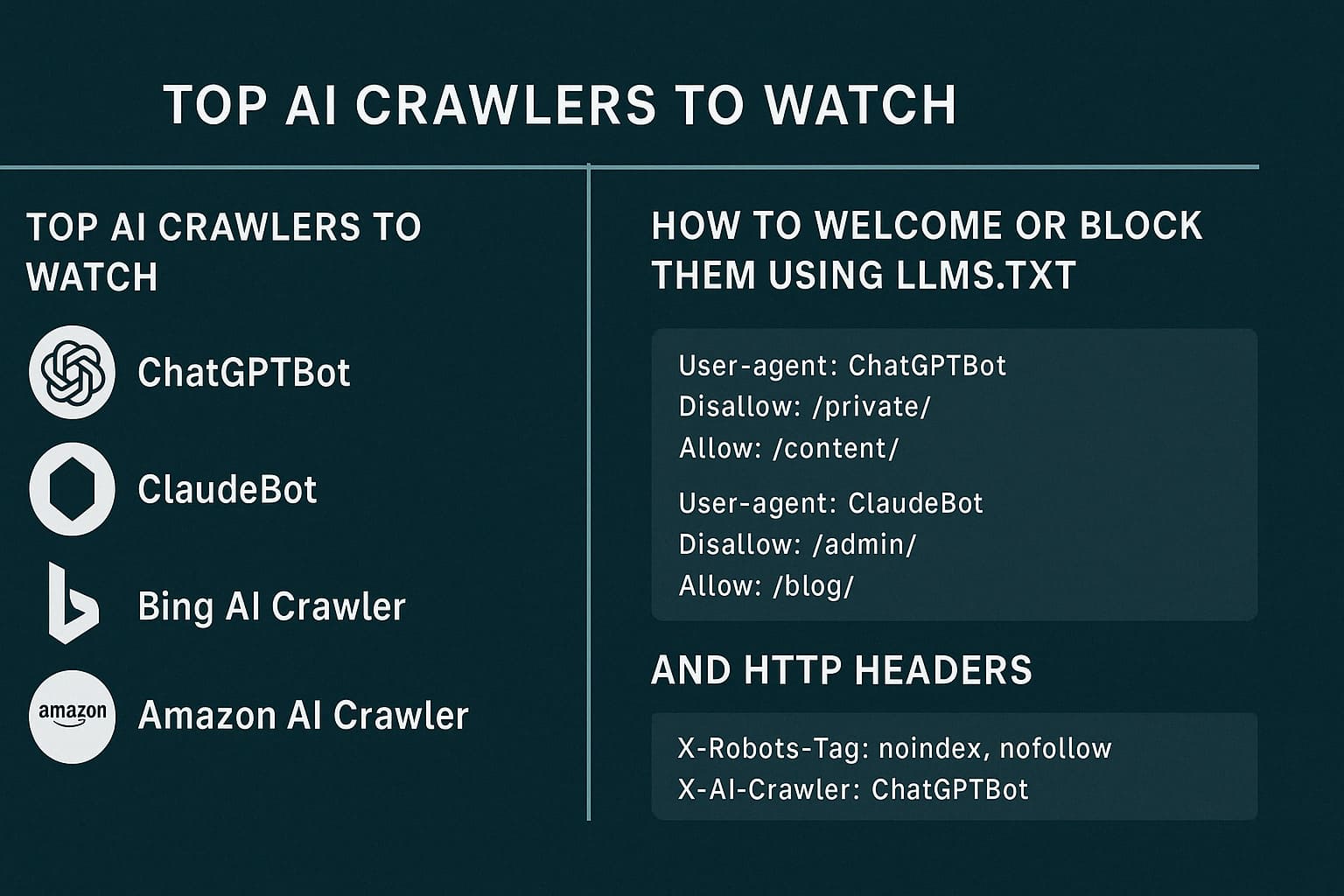

Several AI bots are making waves in the digital space, each with a unique approach to crawling and indexing web content. Let’s take a look at some of the most prominent AI crawlers to watch out for in 2025.

1. GPTBot (OpenAI)

OpenAI’s documentation lists several user-agent tokens rather than a single “ChatGPTBot”: GPTBot, which collects content that may be used to train its models; OAI-SearchBot, which supports search features in ChatGPT; and ChatGPT-User, which fetches pages on behalf of a user’s request. The crawlers only retrieve pages; the language models behind ChatGPT interpret the content and generate answers. If these agents cannot reach your pages, your content is less likely to appear in ChatGPT’s answers.

2. ClaudeBot

ClaudeBot, developed by Anthropic, is the crawler that collects web content for the company’s Claude models. Anthropic states that ClaudeBot follows robots.txt instructions, so site owners can allow or restrict it with standard directives. As with OpenAI’s crawlers, interpretation of meaning and context happens in the model, not in the crawler itself, so clean, well-structured content is the main thing content-driven sites can control in 2025.

3. Google-Extended (Gemini)

Google does not document a separate crawler called “GeminiBot”. Its existing crawlers fetch pages, and Google-Extended is a robots.txt product token that lets site owners control whether their content may be used for Google’s Gemini models. According to Google, Google-Extended does not affect a site’s inclusion or ranking in Google Search, so it can be set independently of your rules for Googlebot.

4. Bingbot (Microsoft)

Microsoft’s Bing crawls the web with bingbot, and the pages it collects feed both classic search results and Microsoft’s AI-powered answers. With AI playing a growing role in Microsoft’s services, staying accessible to bingbot matters for SEO in 2025. As with other search engines, high-quality, user-focused content tends to rank better.

5. Amazonbot (Amazon)

Amazon’s crawler identifies itself as Amazonbot. Amazon describes it as a crawler used to improve its services, such as helping Alexa answer questions. E-commerce sites in particular should decide deliberately whether to allow it, since clear, well-structured product pages are easier for any crawler to interpret.

How to Optimize for AI Crawlers Using LLMs.txt

As AI crawlers continue to evolve, webmasters must adapt to new methods for managing these bots. One such method is LLMs.txt, a proposed convention for a file at your site root that tells AI systems what your site contains. It works alongside robots.txt, which remains the file that crawlers such as GPTBot and ClaudeBot are documented to check for access rules.

1. What is LLMs.txt?

LLMs.txt is a text file that website owners publish to guide how AI systems use their site. As proposed at llmstxt.org, it is a Markdown file served at /llms.txt containing a title, a short summary, and curated links to the pages you most want language models to read. It is a proposal rather than a ratified standard, and support varies by vendor. Unlike the traditional robots.txt file, the proposal does not define User-agent, Allow, or Disallow directives, so keeping sensitive information, duplicate content, or irrelevant pages away from crawlers is still the job of robots.txt. The directive examples below use robots.txt syntax for that reason.
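For reference, the llms.txt proposal at llmstxt.org describes a Markdown layout: an H1 title, a blockquote summary, and H2 sections containing link lists. The following is a minimal sketch of generating such a file; the `Link` class, the `build_llms_txt` helper, and the example.com URLs are hypothetical names chosen for illustration, not part of the proposal.

```python
from dataclasses import dataclass


@dataclass
class Link:
    title: str
    url: str
    note: str = ""  # optional short description shown after the link


def build_llms_txt(site_name: str, summary: str, sections: dict[str, list[Link]]) -> str:
    """Render Markdown in the shape the llms.txt proposal describes."""
    lines = [f"# {site_name}", "", f"> {summary}", ""]
    for heading, links in sections.items():
        lines.append(f"## {heading}")
        lines.append("")
        for link in links:
            suffix = f": {link.note}" if link.note else ""
            lines.append(f"- [{link.title}]({link.url}){suffix}")
        lines.append("")
    # End with exactly one trailing newline.
    return "\n".join(lines).rstrip() + "\n"


# Hypothetical site and URL, for illustration only.
EXAMPLE = build_llms_txt(
    "Example Blog",
    "Articles about AI crawlers and site configuration.",
    {
        "Guides": [
            Link(
                "Crawler basics",
                "https://example.com/blog/crawlers.md",
                "overview of common bots",
            )
        ]
    },
)
```

Writing `EXAMPLE` to a file named `llms.txt` at the site root would publish it; whether a given AI system reads the file is up to its operator.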

2. How to Welcome AI Crawlers Using LLMs.txt

To ensure AI crawlers can access the right parts of your site, give them clear instructions. The directives below use robots.txt syntax, which is where OpenAI and Anthropic document that their crawlers look for access rules, so place them in /robots.txt even if you also publish an LLMs.txt file. Here’s a simple example:

    User-agent: GPTBot
    Disallow: /private/
    Allow: /content/

    User-agent: ClaudeBot
    Disallow: /admin/
    Allow: /blog/

In this example, GPTBot is allowed to crawl the “/content/” section but is blocked from the “/private/” section. Similarly, ClaudeBot is allowed to crawl the “/blog/” section while being blocked from the “/admin/” section. Paths that match no rule are allowed by default.

This approach gives you control over what content is crawled. Keep in mind that these directives are requests that well-behaved bots honor, not an enforcement mechanism.
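Before publishing rules like these, it can help to check how a standards-style parser reads them. The sketch below uses Python's standard `urllib.robotparser` module; the agent names are the tokens OpenAI and Anthropic publish (GPTBot and ClaudeBot), and the `is_allowed` helper and sample paths are assumptions made for illustration. Real crawlers may resolve conflicting rules differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# Allow/Disallow groups in robots.txt syntax, one group per crawler.
RULES = """\
User-agent: GPTBot
Disallow: /private/
Allow: /content/

User-agent: ClaudeBot
Disallow: /admin/
Allow: /blog/
"""


def is_allowed(rules: str, user_agent: str, path: str) -> bool:
    """Return True if the parsed rules permit user_agent to fetch path."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    for agent, path in [
        ("GPTBot", "/content/post.html"),
        ("GPTBot", "/private/notes.html"),
        ("ClaudeBot", "/blog/hello"),
        ("ClaudeBot", "/admin/"),
    ]:
        print(agent, path, is_allowed(RULES, agent, path))
```

Running a check like this in a deployment pipeline catches typos in paths or agent names before a crawler ever sees them.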

3. How to Block AI Crawlers Using LLMs.txt

In some cases, you may not want certain AI crawlers to access your site. You can block a specific crawler with a “Disallow” directive for its user-agent token. As above, this is robots.txt syntax and belongs in /robots.txt. Here’s how:

    User-agent: Google-Extended
    Disallow: /

This rule tells Google not to use any page on your website for its Gemini models; Google-Extended is the token Google publishes for that purpose. While blocking certain bots may be beneficial in some cases (for example, if you have content you don’t want used for AI training), be cautious: blocking a crawler can reduce your site’s visibility on the AI-powered platforms it feeds. For truly sensitive content, use authentication rather than crawler directives.
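If you maintain a list of agents to block, the rule groups can be generated rather than typed by hand. This is a minimal sketch, assuming the token Google-Extended (the one Google publishes for Gemini-related use); `block_groups` and `blocked_everywhere` are hypothetical helper names, and the check relies on Python's standard `urllib.robotparser`.

```python
from urllib.robotparser import RobotFileParser


def block_groups(tokens: list[str]) -> str:
    """Render one 'Disallow: /' group per user-agent token."""
    groups = [f"User-agent: {token}\nDisallow: /\n" for token in tokens]
    return "\n".join(groups)


def blocked_everywhere(rules: str, token: str, sample_paths: list[str]) -> bool:
    """Return True if token may fetch none of the sample paths."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return not any(parser.can_fetch(token, path) for path in sample_paths)


RULES = block_groups(["Google-Extended"])
```

Agents that are not listed remain unaffected, so a block for one token does not silently close the site to every other crawler.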

Enhancing Your Website with HTTP Headers

In addition to using LLMs.txt, HTTP headers provide an additional layer of control over how AI crawlers interact with your website. By setting custom HTTP headers, you can specify rules for bot interaction, define cache settings, and even provide metadata that can help AI crawlers better understand your content.

1. Using HTTP Headers for Access Control

HTTP headers can be used to manage bot access. For example, the “X-Robots-Tag” response header tells crawlers whether to index a page or follow its links. It is documented for search engine crawlers such as Googlebot and bingbot; whether a given AI crawler honors it depends on the vendor. Here’s an example of how to block indexing:

    X-Robots-Tag: noindex, nofollow

This tells compliant bots not to index the page or follow any links within it. The “Cache-Control” header governs how browsers and intermediary caches store the page; it is a caching directive, not an access control, so do not rely on it to restrict AI crawlers.
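On the server side, the header is usually attached per path. The sketch below shows one way to do that with Python's standard `http.server`; the path prefixes, the `extra_headers` helper, and the `Handler` class are assumptions for illustration, and a production site would normally set the header in its web server or framework configuration instead.

```python
from http.server import BaseHTTPRequestHandler

# Hypothetical path prefixes that should stay out of indexes.
NOINDEX_PREFIXES = ("/private/", "/admin/")


def extra_headers(path: str) -> dict[str, str]:
    """Return the response headers to add for a given request path."""
    if path.startswith(NOINDEX_PREFIXES):
        return {"X-Robots-Tag": "noindex, nofollow"}
    return {}


class Handler(BaseHTTPRequestHandler):
    """Serves a placeholder page and adds X-Robots-Tag where configured."""

    def do_GET(self) -> None:
        body = b"<html><body>Hello</body></html>"
        self.send_response(200)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        for name, value in extra_headers(self.path).items():
            self.send_header(name, value)
        self.end_headers()
        self.wfile.write(body)
```

To try it locally, pass `Handler` to `http.server.HTTPServer(("127.0.0.1", 8000), Handler)` and call `serve_forever()`, then inspect the response headers for a path under `/private/`.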

2. Using Custom Headers for AI Crawlers

You can also send custom response headers aimed at specific crawlers. For instance, you might include a header like this:

    X-AI-Crawler: GPTBot

Treat this as experimental: “X-AI-Crawler” is not a standard header, and a custom header only has an effect if a crawler’s operator chooses to read it. Check each vendor’s documentation before relying on one.
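Treating a page differently per crawler starts with knowing which crawler sent the request, and the usual signal is the User-Agent request header. Here is a minimal sketch; the token list reflects names the operators publish, while `identify_crawler` and the sample strings are hypothetical. User-Agent values can be spoofed, so this is a hint for logging or header selection, not a security check.

```python
from typing import Optional

# User-agent tokens published by the respective operators; extend as needed.
KNOWN_TOKENS = (
    "GPTBot",
    "OAI-SearchBot",
    "ChatGPT-User",
    "ClaudeBot",
    "bingbot",
    "Amazonbot",
)


def identify_crawler(user_agent: str) -> Optional[str]:
    """Return the first known token found in the User-Agent string, if any."""
    lowered = user_agent.lower()
    for token in KNOWN_TOKENS:
        if token.lower() in lowered:
            return token
    return None


if __name__ == "__main__":
    # Illustrative strings only; real crawler User-Agent values may differ.
    print(identify_crawler("Mozilla/5.0 (compatible; GPTBot/1.0)"))
    print(identify_crawler("Mozilla/5.0 (Windows NT 10.0; Win64; x64)"))
```

A server could use the returned token to choose which response headers to attach or simply to record which AI crawlers visit and how often.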


Conclusion

In 2025, AI crawlers will continue to shape the way we interact with content on the web. Understanding how these bots reach your site is crucial to ensuring that your content appears accurately in relevant AI-driven results. By using tools like LLMs.txt, robots.txt directives, and HTTP headers, you can manage how bots like GPTBot, ClaudeBot, and Google’s crawlers interact with your website, supporting your SEO strategy and improving the overall user experience.

As AI continues to play a dominant role in the digital ecosystem, it’s essential to keep your site’s structure and content optimized for these intelligent bots. By doing so, you’ll be better positioned to take advantage of the opportunities these AI crawlers present in 2025 and beyond.